Laleh Pouyan tries to minimise the cost while maximising the speed of high-performance computing (HPC) used in weather forecasting. She will present a poster on her work at the ECMWF workshop on HPC in meteorology from 9 to 13 October 2023.

Laleh developed an interest in computer programming as early as high school and went on to study computer science engineering in Iran. She followed this with a master's degree focusing on fault tolerance and reliability in computing, which also introduced her to HPC.

During her PhD at the Forschungszentrum Jülich in Germany, she used an HPC facility for the first time. “The project was about finding the best architecture for the computing demands of applications that explore the genomes of single cells,” she says.

Through this project, she learnt to collaborate with scientists from other disciplines, experience that stood her in good stead when she joined ECMWF in 2022.

Use in digital twins

Laleh’s work aims to improve the computational speed and scalability of the digital twins that ECMWF is developing as part of its role in the EU’s Destination Earth (DestinE) initiative. DestinE is being implemented by the European Space Agency (ESA), the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT) and ECMWF.

Digital twins are digital replicas of highly complex Earth systems. DestinE’s digital twins will provide near-real-time, highly detailed and constantly evolving replicas of Earth. The first two focus on weather-induced and geophysical extremes, and on climate adaptation.

“My work is intended to be of benefit for those digital twins by improving their computational performance and efficiency,” Laleh says.

Mini-applications

At ECMWF, Laleh investigates how well, and how efficiently, mini-applications extracted from the Centre’s Integrated Forecasting System (IFS) run on the wide range of processor architectures found in contemporary HPC systems.

The goal is to arrive at an implementation that maximises the speed of the application while minimising its cost in terms of energy use on a given hardware architecture.
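One common way to express the energy cost of a run, used here as an illustrative assumption rather than a description of the exact metric in Laleh’s work, is energy to solution: the average power $\bar{P}$ drawn during the run multiplied by its execution time $t$. With hypothetical figures, a configuration that draws more power can still use less energy if it finishes sooner:

$$
E = \bar{P}\,t
$$

$$
200\ \text{W} \times 0.100\ \text{s} = 20\ \text{J}, \qquad 300\ \text{W} \times 0.050\ \text{s} = 15\ \text{J}
$$

The power values are invented for illustration; the point is that speed and energy use are linked, but not one-to-one.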

The mini-applications reproduce core parts of the IFS, for example the cloud microphysics scheme or the radiation scheme.

“They are extracted from the IFS so that it becomes possible to benchmark them more easily,” Laleh explains. Benchmarking here means finding out which parts of a mini-application take the most time, and why.

A slow part may be held back by memory access, by input/output (I/O) or by computation itself. “After our investigations, we can find some kind of optimisation in the code or the architecture,” she says.
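A minimal sketch of this kind of hot-spot analysis, written in Python for readability and not taken from ECMWF's own tooling: each named region of a program is timed, and the regions are then ranked by their share of the total run time. The region names and the two stand-in functions are hypothetical; a real analysis of an IFS mini-application would typically rely on dedicated profilers and hardware counters instead.

```python
import time
from contextlib import contextmanager

# Accumulated wall-clock time per named region, in seconds.
timings: dict[str, float] = {}


@contextmanager
def region(name: str):
    """Time the enclosed block and add the elapsed time to the total for `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = timings.get(name, 0.0) + time.perf_counter() - start


def hot_spots(totals: dict[str, float]) -> list[tuple[str, float]]:
    """Return (region, share of total time) pairs, most expensive region first."""
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return [(name, 0.0) for name, _ in ranked]
    return [(name, t / grand_total) for name, t in ranked]


# Hypothetical stand-ins for a compute-heavy and a memory-heavy part of a kernel.
def compute_part(n: int) -> int:
    return sum(i * i for i in range(n))


def memory_part(n: int) -> list[int]:
    return list(range(n))[::-1]


if __name__ == "__main__":
    for _ in range(10):
        with region("compute"):
            compute_part(200_000)
        with region("memory"):
            memory_part(200_000)
    # The region at the top of this list is the first candidate for optimisation.
    for name, share in hot_spots(timings):
        print(f"{name:>8}: {share:6.1%}")
```

Ranking by share of total time, rather than by absolute time, makes results from different architectures or input sizes easier to compare.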

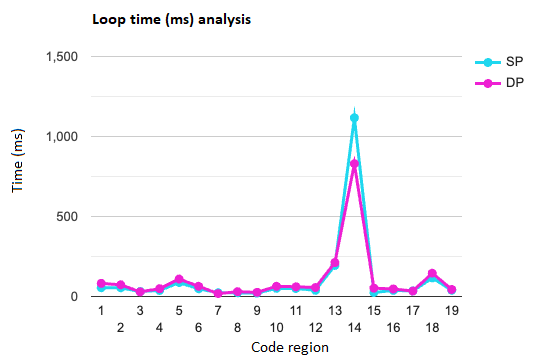

Performance analysis to identify the hot-spot regions of the cloud microphysics scheme in the IFS (CLOUDSC) for single-precision (SP) and double-precision (DP) versions.

Vectorisation

Laleh is currently porting the parts of the IFS code she investigates to a specialised architecture, a vector processor, to study how they perform on it. The computational node she uses in her experiments acts like a small supercomputer. “It has eight vector engines (VEs), which are a form of CPU, and each VE has eight vector cores and six high-bandwidth memory modules. Each vector core is a powerful single core.”

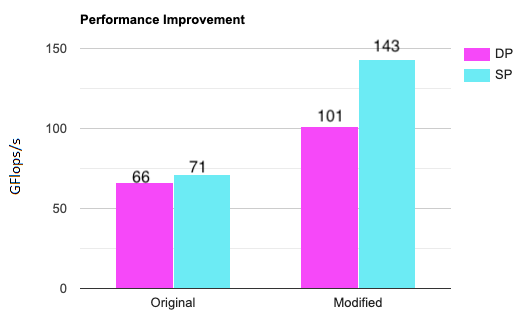

After porting the code to the new architecture, she varies the input and parameters and applies vectorisation, which lets a single instruction operate on many data elements at once, to improve the application’s performance. “Performance for us means the execution time,” she explains. “We can change the configuration to reduce that time, for example from 100 milliseconds to 50 or 25 milliseconds.”

The process also shows how far the execution time can be reduced by increasing the number of vector cores. Another consideration is the application’s memory and I/O bandwidth requirements. “We may have to consider some modifications of the architecture to ensure the application can run better,” Laleh says.
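Gains like these are usually expressed as a speedup $S$, the ratio of the old to the new execution time, while the payoff from adding cores is measured as parallel efficiency $\eta_p$, where $T_p$ is the execution time on $p$ cores. Using the example times Laleh gives, and assuming purely for illustration that the 100 ms run used one vector core and the 25 ms run used eight:

$$
S = \frac{T_{\text{before}}}{T_{\text{after}}}, \qquad \frac{100\ \text{ms}}{50\ \text{ms}} = 2, \qquad \frac{100\ \text{ms}}{25\ \text{ms}} = 4
$$

$$
\eta_p = \frac{T_1}{p\,T_p} = \frac{100\ \text{ms}}{8 \times 25\ \text{ms}} = 0.5
$$

An efficiency below 1 means the extra cores are not fully exploited, which often points to limits such as memory or I/O bandwidth.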

This chart shows some of the performance improvements achieved through Laleh’s work. DP stands for double precision and SP for single precision.

To decide what to fine-tune, Laleh looks at the parts of an application that take the most time. “That part is a good candidate for modification,” she says. “However, we are just exploring how things could be done. Implementation is a next step down the road.”