Simon McIntosh-Smith, Professor of High Performance Computing, Head of the HPC Research Group, University of Bristol, and PI for the Isambard project. Follow @simonmcs on Twitter for more news from the HPC research group in Bristol.

Following Peter Bauer’s blog on scalability in this series on high-performance computing (HPC), here I discuss the emerging challenge of greater diversity in computer architectures – set to be another key topic at ECMWF’s HPC Workshop in September.

Over the past decade we have grown used to the dominance of a single vendor in HPC processors: Intel. Based on the now ubiquitous x86 architecture, Intel processors power 95% of the systems in the June 2018 Top500 list. However, 2018 has seen the emergence of viable alternatives to this status quo.

AMD’s EPYC architecture is winning plaudits for its performance and cost competitiveness, while Arm-based HPC-optimised CPUs, such as Cavium’s ThunderX2, have also emerged as strong competition.

IBM’s POWER9 has likewise carved out a place at the top of the Top500, powering the USA’s Summit and Sierra machines, which pair POWER9 CPUs with NVIDIA Volta GPUs. Fujitsu recently announced the first technical details of its Post-K CPU, a 48-core monster with wide vector units and a high-bandwidth memory system faster than even the latest GPUs.

And that’s not to mention NEC’s exciting new vector engine, or the 260-core Sunway CPUs in the TaihuLight supercomputer at #2 in the Top500.

What will the increased diversity in computer architectures mean for the numerical weather prediction community?

First, the good news. More choice means more competition which, in turn, brings lots of benefits. In a more competitive landscape, we see a faster rate of innovation, with better systems for us to buy and use. Greater competition also results in better price competitiveness, so we will be able to deliver more science within our fixed budgets.

Now the not-so-good news. Greater diversity in computer architectures can create a big challenge for scientific software developers. Porting software between architectures that seem very similar, even from one generation of x86 to the next, can be more burdensome than one might expect. Porting between different instruction sets, such as x86 and Arm, need not be a much bigger step, especially now that most codes rely on standard C/C++ or Fortran compilers; however, highly optimised codes that assume a particular SIMD width or cache-line size may run into unexpected problems. The challenge is significantly greater when porting from one kind of architecture to a very different one, such as from a CPU to a GPU, as anyone who has tried this with a real production code can attest.
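As an illustration of avoiding such assumptions, the sketch below writes a simple kernel in plain C and marks it with OpenMP’s `simd` directive instead of hand-coding intrinsics for one vector width. The compiler is then free to pick a width that suits the target (for example NEON on Arm or AVX on x86). The function name `scaled_add` is hypothetical and not taken from any of the codes discussed here.

```c
#include <stddef.h>

/* Hypothetical kernel: y[i] = a * x[i] + y[i].
 * Nothing here hard-codes a SIMD width or cache-line size. A hand-written
 * AVX-512 version (e.g. using _mm512_fmadd_pd on eight doubles at a time)
 * would not compile for Arm at all. When built with OpenMP support
 * (e.g. GCC/Clang -fopenmp or -fopenmp-simd), the pragma asks the compiler
 * to vectorise at whatever width the target offers; without OpenMP the
 * pragma is ignored and the loop still computes the same result. */
void scaled_add(size_t n, double a, const double *restrict x,
                double *restrict y)
{
#pragma omp simd
    for (size_t i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}
```

Choosing a length such as 10, which is not a multiple of common vector widths, is a quick way to check that remainder iterations are handled correctly on each platform.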

The common pitfall to avoid is assuming that your next target architecture is the only one that will ever matter. History clearly shows this is never true, yet if the next version of a code is written on the assumption that it will only ever run on a certain CPU architecture or a specific GPU, that code immediately suffers from a major disadvantage: it becomes locked into a single architectural family, and subject to the fortunes of that choice. Once locked into one vendor or architecture, a code may be unable to exploit a big advance elsewhere, such as significantly faster or cheaper processors from another vendor, or may suffer if a processor line is delayed or cancelled altogether, as with Intel’s recent termination of the Xeon Phi roadmap.

In order to be in a strong position to exploit the benefits brought by a more diverse landscape of processors, scientific software needs to be portable in terms of both function and performance. By performance portable, we mean that the code performs both “well” and “similarly well” across the range of target architectures. For a memory-bandwidth-bound code, this might mean achieving a similar fraction of peak memory bandwidth on every platform; for a compute-bound code, a similar fraction of peak FLOP/s.
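The definition above is informal. One widely cited way to make it concrete, added here for illustration and not necessarily the approach used in the Isambard work, is the metric proposed by Pennycook, Sewall and Lee: measure each platform’s efficiency as a fraction of peak, then take the harmonic mean across platforms, scoring zero if the code fails to run on any of them. The C sketch below assumes the efficiencies have already been measured; the function names are hypothetical.

```c
#include <stddef.h>

/* Efficiency on one platform: e.g. measured bandwidth / peak bandwidth for a
 * bandwidth-bound code, or achieved FLOP/s / peak FLOP/s for a compute-bound
 * one. */
double fraction_of_peak(double achieved, double peak)
{
    return achieved / peak;
}

/* Harmonic mean of per-platform efficiencies (Pennycook, Sewall and Lee).
 * A platform where the code does not run (efficiency <= 0) makes the whole
 * score zero, so a code cannot look "portable" by excelling on some
 * platforms while failing on others. */
double perf_portability(size_t n, const double *efficiency)
{
    if (n == 0)
        return 0.0;
    double sum_inv = 0.0;
    for (size_t i = 0; i < n; ++i) {
        if (efficiency[i] <= 0.0)
            return 0.0;
        sum_inv += 1.0 / efficiency[i];
    }
    return (double)n / sum_inv;
}
```

With this choice, a code reaching 75% of peak on every platform scores 0.75, whereas one reaching 90% on one platform and 10% on another scores only 0.18, despite its better best case. The harmonic mean is what captures the “similarly well” half of the definition.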

There’s growing evidence that it is possible to write performance portable scientific code, but it doesn’t happen by default. One has to avoid vendor-specific parallel programming languages, such as CUDA and OpenACC. Programming models such as OpenMP 4.5, Kokkos, RAJA and SYCL all support the development of performance portable codes across a wide range of platforms. Alternatively, domain-specific languages (DSLs), or other techniques for generating the required code from a higher-level specification, may be appropriate, as high-profile projects in the numerical weather prediction field such as GridTools and PSyclone have shown. Even once the language or DSL has been chosen, careful design is still required to achieve the desired performance portability.
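As a minimal sketch of what one of these approaches looks like, here is a STREAM-style triad written with OpenMP 4.5 `target` directives. With a compiler built with offload support, the loop can be mapped onto a GPU; otherwise the same source falls back to running on the host CPU. The kernel and its name are illustrative assumptions, not code from any of the projects mentioned.

```c
#include <stddef.h>

/* Hypothetical STREAM-style triad: a[i] = b[i] + scalar * c[i].
 * One source, several targets: an OpenMP 4.5 compiler with offload support
 * can run the loop on an accelerator, a host-only OpenMP build runs it on
 * the CPU, and a build without OpenMP ignores the pragma and runs it
 * serially. The map clauses say which arrays are copied to the device
 * (b, c) and which are copied back (a). */
void triad(size_t n, double scalar, double *a, const double *b,
           const double *c)
{
#pragma omp target teams distribute parallel for simd \
    map(to: b[0:n], c[0:n]) map(from: a[0:n])
    for (size_t i = 0; i < n; ++i)
        a[i] = b[i] + scalar * c[i];
}
```

Note that the directive describes the available parallelism and data movement rather than a particular thread or vector count, which is what leaves each compiler room to specialise it for its hardware.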

Investigating new computer architectures

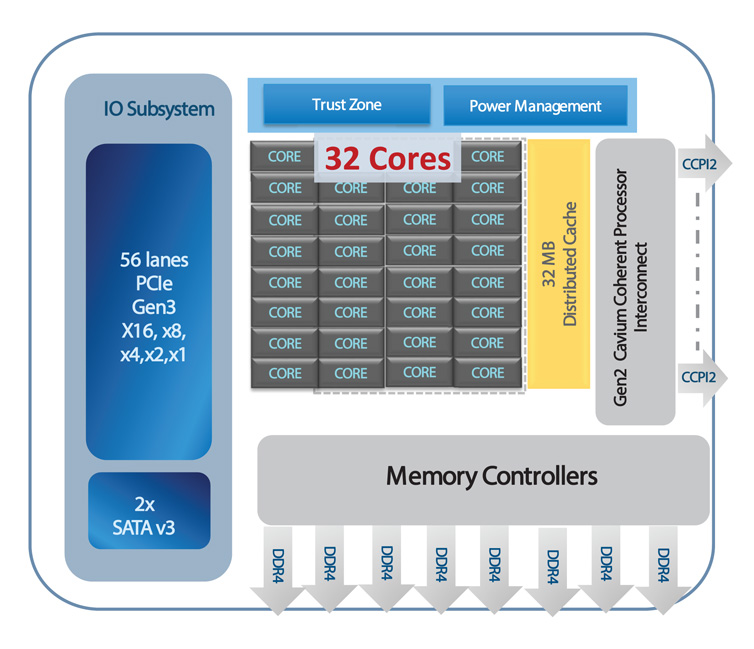

To get a head start in trying to understand the relevance of some of the newest computer architectures to emerge – Arm-based CPUs optimized for HPC – the Met Office and the GW4 Alliance recently teamed up to start the Isambard project. Isambard will be the world’s first production Arm-based supercomputer. Designed and built by Cray, Isambard will sport 168 nodes, each using 32-core Cavium ThunderX2 Arm-based CPUs in dual socket form, to provide 10,752 cores in total. Isambard will be used for extensive testing to determine whether Arm-based CPUs can deliver significant performance and cost advantages over other approaches.

Schematic overview of the Cavium ThunderX2 processor used in the Isambard project. Each processor contains up to 32 custom ARMv8.1 cores. Image: Cavium, Inc.
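The total core count for Isambard follows directly from its node configuration:

```latex
168~\text{nodes} \times 2~\tfrac{\text{sockets}}{\text{node}} \times 32~\tfrac{\text{cores}}{\text{socket}} = 10\,752~\text{cores}
```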

A wide range of application areas is being explored, including weather and climate codes such as ECMWF’s OpenIFS, the Met Office Unified Model, the NEMO ocean model, and UCAR’s Weather Research and Forecasting (WRF) model. Early indications are extremely promising: the Arm-based ThunderX2 nodes are proving performance-competitive with the latest leading x86 CPUs, while being significantly cheaper. This is the first time that Arm-based CPUs have been shown to be performance-competitive for mainstream HPC workloads, and their improved cost competitiveness reverses the trend of the last five years, during which improvements in price/performance have slowed to a historic low.

There’s another, longer-term advantage that Arm-based processors can specifically bring to HPC: the potential for real co-design. This is a term that’s often bandied about, and while there’s some evidence of previous co-design efforts working at the system level, little progress has been made at the silicon chip level. This is understandable: at the chip level, HPC has been too small a market to justify the traditionally large cost of customising CPUs for our applications. But the Arm ecosystem was designed specifically to make this possible. Arm has hundreds of customers, each designing bespoke processors based on Arm’s designs, and doing so much more quickly and at much lower cost than was previously possible. This raises the prospect of a “new golden age for computer architecture”, as identified by John Hennessy and David Patterson in their recent ACM/IEEE ISCA 2018 Turing Lecture.

In future, Arm-based processors could be highly customised for HPC, or specifically for weather and climate codes. This might mean adding instruction set extensions such as very wide vectors, or co-processors and accelerators for application-specific kernels. Such processors could bring significant steps forward in performance for scientists around the world whose crucial science is being hampered by the relatively small improvements in performance, and in performance per dollar, seen in recent years. As such, Arm’s entry into the HPC market, and the new ideas, innovation and competition it brings, could trigger a revolution in scientific computing of a kind not seen since the commodity CPU revolution of the late 1990s. Exciting times are ahead.

Further information

For full details of the latest Isambard results, see the recent paper at the Cray User Group (CUG) workshop from May 2018:

Comparative Benchmarking of the First Generation of HPC-Optimised Arm Processors on Isambard. S. McIntosh-Smith, J. Price, T. Deakin and A. Poenaru, CUG 2018, Stockholm, May 2018.

The slides from the talk are also available. An extended version of the paper with additional results will appear later this year in a special issue of Concurrency and Computation: Practice and Experience (CCPE).

Banner image: spainter_vfx/iStock/Thinkstock