Peter Bauer, Deputy Director of Research and Programme Manager of the ECMWF Scalability Programme

One of the complex questions the ECMWF Scalability Programme is trying to answer is: which efficiency gains are actually needed and achievable for our future forecasting system?

This question is difficult to answer because model and data assimilation components are changing continually, which also has implications for their cost. One recent example is the introduction of a more continuous approach to data assimilation that incorporates the most recently received observations during the execution of the assimilation suite. This maximises the volume of observations exploited compared to a system with a fixed cut-off time, but it has implications for computational cost, as having more observations may require more iterations in the minimisation. Another example is the output produced by the wave model for performance diagnostics and for generating additional products for selected users, which appears to explain a large part of the wave model cost and is currently under review.

At the same time, we need to know where the absolute limits of computability lie, because this can inform us about the expected lifetime of key components like the spectral model dynamical core or the ocean model NEMO. Since reforming or replacing such components takes many years, information on basic computing limitations is essential for ECMWF’s research strategy.

Of course, computability and efficiency gain estimates are based on the results achieved with present computing technology. So, the Scalability Programme is also making a major investment in trialling alternative processor types, memory hierarchies, networks and workflows. Examples include porting weather and climate dwarfs to various CPU-type processors, GPUs and even FPGAs in the ESCAPE and EuroEXA projects, and tests in the NextGenIO project with new NVRAM and NVMe memory layer technology, which are dramatically accelerating our product generation.

But running the entire ECMWF Integrated Forecasting System (IFS) on the biggest available HPC systems is a different matter. While this does not test future model and code options, it provides us with quantitative estimates of present performance – and how this compares to other forecast models. This exercise has been performed successfully in the past. In the early days, at a 1988 Cray symposium in Minneapolis, Deborah Salmond and Mike O’Neill managed to run a few time steps on an 8-processor Cray Y-MP, which had a theoretical peak of 2.75 Gflop/s for the entire system. David Dent received a Cray Gigaflops Award for breaking the gigaflops barrier with the spectral IFS soon after. Closer to home, the teraflops barrier was broken with the IFS in 2004 on 2048 IBM p690+ processors, using one entire cluster of ECMWF’s computer.

A Cray Y-MP 8/8-64 was installed at ECMWF in 1990. This system had 8 CPUs with a cycle time of 6 nanoseconds (166 MHz), and 512 MB of memory. It was the first ECMWF supercomputer running the Unix operating system.

Tests using the Titan Cray System in Oak Ridge National Laboratory, US

A more recent example is ECMWF’s trials, carried out by George Mozdzynski within the CRESTA project, on an allocation on the Titan Cray system at Oak Ridge National Laboratory (ORNL). Titan occupied first place on the top500.org list in November 2012. On this system, the IFS was scaled up to 220,000 cores, which was not possible with ECMWF’s own computer. Already then, we performed experiments with different ways to optimise computations and data communication and to overlap them as much as possible, reducing the time processors remain idle. Trying the same on our present Cray nearly eliminated this gain, because the XC40 has a much faster network and the MPI distributed-memory standard has evolved in the meantime. But the Titan experiments showed what to expect in a future scenario with more cores, a larger network and slower clock speeds. This experience clearly showed that both software and hardware need to evolve together. The Oak Ridge facilities were also used for a big forecast production run in the Athena project, which successfully demonstrated the positive impact of model spatial resolution on representing climate variability.

Tests using Europe’s biggest supercomputer - Piz Daint Cray at CSCS in Switzerland

Mostly stimulated by the requirements of last year’s work in the Centre of Excellence in Simulation of Weather and Climate in Europe (ESiWACE) on estimating the computability of present-day models like IFS and ICON, ECMWF ported the model onto the biggest European supercomputer, the Piz Daint Cray at CSCS (Swiss National Supercomputing Centre) in Switzerland.

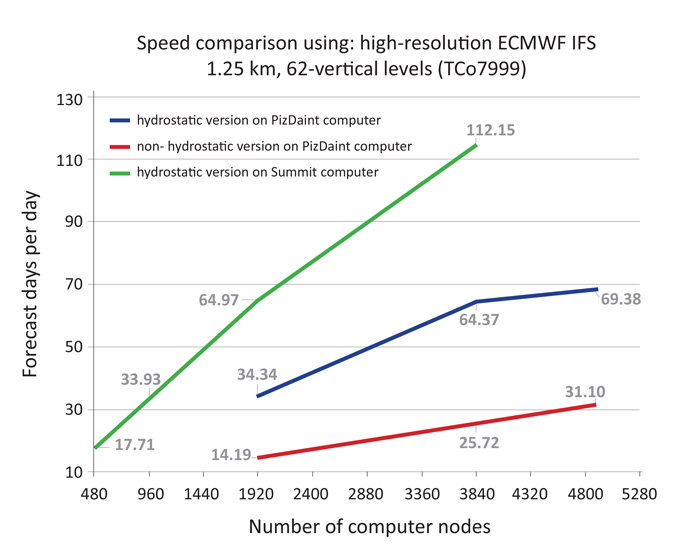

The model resolution was set to 1.25 km, which translates to about 16 billion grid points with the cubic-octahedral grid and 62 vertical levels. The model was scaled across the entire supercomputer, up to 4880 compute nodes. This was done without using the GPUs, however, which are integrated on the same nodes as the CPUs of Piz Daint. On this allocation, the IFS achieved a maximum throughput of 69 forecast days per day, which is at least a factor of 4 short of the operational requirement. When the cost of coupling, better vertical resolution and data input and output is also accounted for, this shortfall grows to an impressive factor of 200. This was compared to the GPU-capable COSMO model as operated by MeteoSwiss on Piz Daint. On similar terms, COSMO’s shortfall factor turned out to be about 65. While the tested IFS ran faster in absolute terms on Piz Daint, COSMO exploits both CPUs and GPUs on the same number of nodes, thus making much better use of Piz Daint’s heterogeneous architecture.
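The grid-point count quoted above can be checked with a few lines of arithmetic. The sketch below assumes the usual octahedral reduced Gaussian grid layout (20 points on the latitude circle nearest each pole, and four more on each circle towards the equator) and that TCo7999 corresponds to 8000 latitude circles per hemisphere. The function names are illustrative and are not taken from the IFS.

```python
EQUATOR_KM = 40075.0  # Earth's equatorial circumference in km


def points_on_latitude(i: int) -> int:
    """Points on the i-th latitude circle counted from the pole (i = 1..N)."""
    return 16 + 4 * i


def octahedral_points(n: int) -> int:
    """Horizontal points of an octahedral grid with n latitudes per hemisphere."""
    per_hemisphere = sum(points_on_latitude(i) for i in range(1, n + 1))
    return 2 * per_hemisphere  # closed form: 4 * n * (n + 9)


n = 8000      # assumed latitude circles per hemisphere for TCo7999
levels = 62   # vertical levels quoted in the text

horizontal = octahedral_points(n)
total = horizontal * levels
# Spacing along the latitude circle closest to the equator.
spacing_km = EQUATOR_KM / points_on_latitude(n)

print(f"horizontal points: {horizontal:,}")
print(f"total grid points: {total:,}")
print(f"spacing near the equator: {spacing_km:.2f} km")
```

Under these assumptions the total comes out at roughly 15.9 billion points, which is consistent with the "about 16 billion" figure, and the spacing near the equator is close to 1.25 km.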

This information is extremely valuable for ECMWF as it tells us not only where we are right now but also which gains are achievable. The comparison was published as part of a special issue on the ‘Race to Exascale’ in the IEEE journal Computing in Science & Engineering in early 2019.

Tests using the Summit IBM-machine in ORNL – currently the biggest system in the world

The next step in this story follows our research strategy of seeking alternative options for the model’s dynamical core. A fundamental feature of the spectral model is the need to perform data communication across many tasks twice per time step to move back and forth between spectral and grid-point space. The more tasks that are run, on more compute nodes, the more communication is involved. Moving data consumes about 10 times more energy than performing the actual calculations, so global data communication is generally perceived as bad. The alternative IFS dynamical core, based on a finite-volume discretisation, performs much less data communication, and predominantly between neighbouring areas, so it should perform better on wider networks across large supercomputers.
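The contrast between global and neighbour-only communication can be illustrated with a toy message count. This is a sketch and not a model of the IFS: it assumes that each spectral transposition is a full all-to-all among all tasks (real codes may restrict transpositions to subgroups of tasks), that a finite-volume halo exchange involves at most 8 neighbours once per time step, and it counts messages only, ignoring message size and network topology. All function names and default values are hypothetical.

```python
def transposition_messages(tasks: int, transposes_per_step: int = 2) -> int:
    """Messages per time step if every transposition is a full all-to-all."""
    return transposes_per_step * tasks * (tasks - 1)


def halo_messages(tasks: int, neighbours: int = 8,
                  exchanges_per_step: int = 1) -> int:
    """Messages per time step if each task only talks to nearby tasks."""
    partners = min(neighbours, tasks - 1)  # cannot exceed the other tasks
    return exchanges_per_step * tasks * partners


for tasks in (16, 256, 4096):
    global_msgs = transposition_messages(tasks)
    local_msgs = halo_messages(tasks)
    print(f"{tasks:5d} tasks: all-to-all {global_msgs:>10,d}  "
          f"halo {local_msgs:>7,d}  ratio {global_msgs / local_msgs:8.1f}")
```

In this toy model the all-to-all count grows with the square of the number of tasks while the halo count grows linearly, which is the qualitative reason the text gives for expecting the finite-volume core to cope better with very large machines.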

To test this hypothesis and to exploit accelerators, ECMWF submitted a project proposal to the US INCITE programme, which allows cutting-edge computations to be run on the world’s largest machine (top500.org, November 2018). The proposal was successful, and ECMWF was granted one year of access to the Summit IBM machine at ORNL. Summit combines 2 Power9 CPUs with 6 NVIDIA Volta GPUs on each of the ~4600 nodes, again in a hybrid configuration, but this time with a well-connected network for better exploiting the massive parallelism of the attached GPUs on each node.

The Summit IBM machine at the Oak Ridge National Laboratory (ORNL) has 2 Power9 CPUs and 6 NVIDIA Volta GPUs on each of the ~4600 nodes. Credit: OLCF at ORNL. Image reproduced under Creative Commons Licence (Attribution 2.0 generic).

Through this award, ECMWF will run experiments along two strands:

- Firstly, to perform a spectral–finite volume model head-to-head comparison on the biggest possible CPU allocation to explore the limits of the spectral model performance.

- Secondly, to port as many IFS components as possible to GPUs and repeat the tests on the hybrid architecture.

These simulations will be instrumental for ECMWF’s research strategy and inform the Scalability Programme about key sources of efficiency gains and where to strengthen development efforts.

A first test with the spectral IFS has already been carried out to compare with the Piz Daint runs. At 1.25 km, the IFS achieved 112 forecast days per day on Summit, which makes it about two times faster on the same number of nodes (see figure below). However, this does not mean that the same amount of energy was used, which is another highly relevant question. It is one of the reasons why we need to learn how to use the attached GPUs much more effectively, so that we can exploit the entire power supplied to the CPU-GPU node.

These are early but interesting times, and while porting code to different computers can be tedious, the benefit will pay for this effort many times over in the future.

Speed-up on Summit and on Piz Daint for the 1.25 km, 62-vertical-level IFS (TCo7999), hydrostatic (H) and non-hydrostatic (NH), using CPUs only with a hybrid MPI/OpenMP parallelisation. The Piz Daint Cray has 12 cores per node; the Summit IBM has 42 cores per node.

Credits/sources

This research was performed by Nils Wedi, Andreas Mueller and Sami Saarinen. The information on Deborah Salmond’s, Mike O’Neill’s and David Dent’s experience was provided by Adrian Simmons. The results on Summit used resources of the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725. The resources on Piz Daint were kindly provided by Thomas Schulthess, CSCS.

Banner credit: OLCF at ORNL. Image reproduced under Creative Commons Licence (Attribution 2.0 generic).