Future developments in high-performance computing will place even more emphasis on parallel processing. They promise greater energy efficiency but rely on levels of parallelism to which current numerical weather prediction (NWP) codes are not adapted. Making codes more scalable, so that they can run efficiently on many more processors, is therefore among the top priorities in NWP.

Through its Scalability Programme, ECMWF is involved in a range of projects designed to cover the entire NWP processing chain, from processing and assimilating observational data to delivering forecasts to Member and Co-operating States and other licensed users.

One of these projects, ESCAPE, aims to provide better insight into the computing performance and energy efficiency of those model components that drive overall cost. ESCAPE stands for Energy-efficient Scalable Algorithms for Weather Prediction at Exascale. Funded by the EU and coordinated by ECMWF, the three-year project started in October 2015 and brings together the expertise of 12 partners: world-leading global and regional numerical weather prediction centres, academic institutions, high-performance computing centres and hardware vendors.

Weather and climate dwarfs

The project’s innovative approach is to extract elements called ‘dwarfs’ from a weather prediction model and adapt them to different hardware options. Dwarfs are key functional components that also pose specific computational challenges for the system. Working at dwarf level allows problem-specific code adaptation, optimisation and benchmarking, and also facilitates knowledge dissemination and training. The experience gained will help identify the model and hardware configurations that offer the best cost–benefit ratio across all applications considered in ESCAPE.
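To make the idea more concrete, the sketch below shows one way a dwarf might be packaged: a self-contained kernel paired with a trusted reference implementation and a small driver that first verifies the result and then times it, so the same component can be checked and benchmarked on each hardware option. This is an illustration under our own assumptions; `Dwarf`, `run_dwarf` and the toy smoothing kernel are hypothetical names and are not taken from the ESCAPE code base.

```python
import time
from dataclasses import dataclass
from typing import Callable, List

Field = List[float]


@dataclass
class Dwarf:
    """Hypothetical container for an extracted model component ('dwarf')."""
    name: str
    kernel: Callable[[Field], Field]     # the (possibly ported/optimised) computation
    reference: Callable[[Field], Field]  # trusted original implementation


def smooth_reference(field: Field) -> Field:
    # Original form: explicit loop over a periodic 3-point smoothing stencil
    n = len(field)
    out = []
    for i in range(n):
        out.append((field[i - 1] + field[i] + field[(i + 1) % n]) / 3.0)
    return out


def smooth_optimised(field: Field) -> Field:
    # "Ported" form: same arithmetic, restructured around shifted copies of the field
    left = field[-1:] + field[:-1]   # left[i] == field[i - 1] (periodic)
    right = field[1:] + field[:1]    # right[i] == field[i + 1] (periodic)
    return [(a + b + c) / 3.0 for a, b, c in zip(left, field, right)]


def run_dwarf(dwarf: Dwarf, field: Field, repeats: int = 5, tol: float = 1e-12) -> float:
    """Verify the kernel against its reference, then return the best wall-clock time in seconds."""
    expected = dwarf.reference(field)
    result = dwarf.kernel(field)
    max_err = max(abs(a - b) for a, b in zip(result, expected))
    if max_err > tol:
        raise AssertionError(f"{dwarf.name}: max error {max_err:.3e} exceeds {tol}")
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dwarf.kernel(field)
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    field = [float(i % 7) for i in range(10_000)]
    dwarf = Dwarf("smoothing", kernel=smooth_optimised, reference=smooth_reference)
    print(f"{dwarf.name}: best of 5 runs {run_dwarf(dwarf, field):.6f} s")
```

Keeping the reference next to the kernel means every port or optimisation is checked for correctness before its timing is trusted.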

Early in the project, eight dwarfs were extracted from ECMWF’s Integrated Forecasting System (IFS). Of these, four have been ported and assessed on different processor types.

ECMWF’s flexible and parallel data framework Atlas, which serves as the underlying data structure for many of the dwarfs, has been used to adapt the dwarf code. This makes it possible to perform model field operations on various grid/mesh options in a unified and parallelised way. Atlas also links to the well-established GridTools library developed by MeteoSwiss, ETH Zurich and the Swiss National Supercomputing Centre (CSCS). GridTools is a software layer that employs a domain-specific language concept to separate science code from hardware-dependent libraries. This concept is being further extended in the second phase of the project and promises great potential for future Earth system model code development.
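The separation of science code from hardware-dependent code can be illustrated with a toy example: the science code states what a stencil computes at a single point, and interchangeable "backends" decide how that computation is traversed over the grid. This is a minimal sketch under our own assumptions; `laplacian`, `serial_backend` and `tiled_backend` are hypothetical names and do not reflect the actual GridTools or Atlas interfaces, which are C++ libraries.

```python
from typing import Callable, List

Grid = List[List[float]]
Accessor = Callable[[int, int], float]  # a(di, dj) -> neighbour value at offset (di, dj)
Stencil = Callable[[Accessor], float]


def laplacian(a: Accessor) -> float:
    # Science code: 5-point Laplacian, written once, with no loops and no hardware detail
    return a(1, 0) + a(-1, 0) + a(0, 1) + a(0, -1) - 4.0 * a(0, 0)


def serial_backend(stencil: Stencil, field: Grid) -> Grid:
    """Apply the stencil point by point on the interior; boundary points are left at zero."""
    ni, nj = len(field), len(field[0])
    out = [[0.0] * nj for _ in range(ni)]
    for i in range(1, ni - 1):
        for j in range(1, nj - 1):
            out[i][j] = stencil(lambda di, dj: field[i + di][j + dj])
    return out


def tiled_backend(stencil: Stencil, field: Grid, tile: int = 4) -> Grid:
    """Same computation traversed tile by tile (a stand-in for a cache- or GPU-friendly schedule)."""
    ni, nj = len(field), len(field[0])
    out = [[0.0] * nj for _ in range(ni)]
    for i0 in range(1, ni - 1, tile):
        for j0 in range(1, nj - 1, tile):
            for i in range(i0, min(i0 + tile, ni - 1)):
                for j in range(j0, min(j0 + tile, nj - 1)):
                    out[i][j] = stencil(lambda di, dj: field[i + di][j + dj])
    return out


if __name__ == "__main__":
    f = [[float(i * i + j) for j in range(10)] for i in range(9)]
    # One science definition, two execution schedules, identical results
    assert serial_backend(laplacian, f) == tiled_backend(laplacian, f)
    print(serial_backend(laplacian, f)[4][4])
```

Switching backends changes only the execution schedule; the `laplacian` definition is untouched, which is the property that allows one science code to target different processor types.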

Efficiency gains

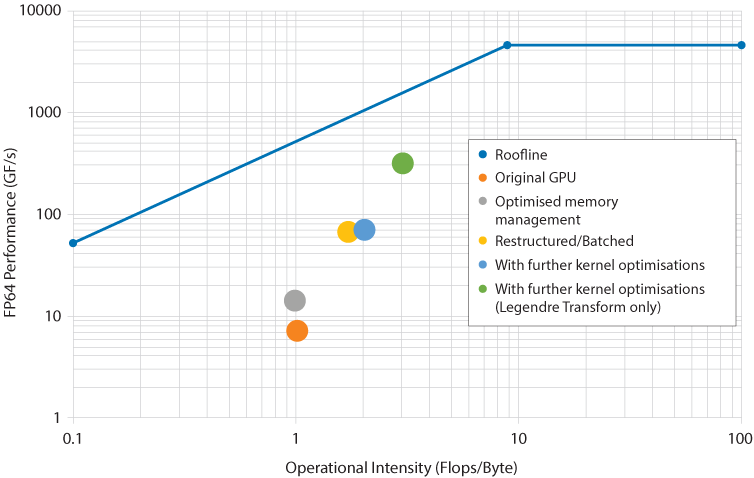

The performance metrics follow the so-called Roofline model, which assesses performance using the ratio of the number of calculations performed to the amount of data moved across the memory hierarchy, known as arithmetic intensity. Since energy efficiency is at the heart of the ESCAPE project, this ratio is also essential for estimating energy cost, because moving data is much more expensive than the computation itself. Measurements and simulations of energy cost make it possible to identify sources of efficiency gains and to extrapolate energy cost estimates to larger scales.
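The Roofline bound and the share of energy spent on data movement can be expressed in a few lines. This is a minimal sketch: the machine figures and per-operation energy costs are invented placeholders chosen only to show the arithmetic, not measurements of any ESCAPE system, and the additive energy model (compute energy plus data-movement energy) is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    peak_gflops: float    # peak compute rate, GFLOP/s
    bandwidth_gbs: float  # sustained memory bandwidth, GB/s
    pj_per_flop: float    # assumed energy per floating-point operation, picojoules
    pj_per_byte: float    # assumed energy per byte moved from memory, picojoules


def arithmetic_intensity(flops: float, bytes_moved: float) -> float:
    """Operations performed per byte moved across the memory hierarchy."""
    return flops / bytes_moved


def attainable_gflops(machine: Machine, intensity: float) -> float:
    """Roofline bound: limited either by peak compute or by bandwidth x intensity."""
    return min(machine.peak_gflops, machine.bandwidth_gbs * intensity)


def ridge_point(machine: Machine) -> float:
    """Intensity (flop/byte) above which a kernel is no longer memory-bound."""
    return machine.peak_gflops / machine.bandwidth_gbs


def energy_joules(machine: Machine, flops: float, bytes_moved: float) -> float:
    """Additive energy model: compute energy plus data-movement energy."""
    return (flops * machine.pj_per_flop + bytes_moved * machine.pj_per_byte) * 1e-12


if __name__ == "__main__":
    # Illustrative numbers only
    m = Machine(peak_gflops=1000.0, bandwidth_gbs=100.0, pj_per_flop=10.0, pj_per_byte=100.0)
    n = 1_000_000
    flops, bytes_moved = 2 * n, 24 * n  # y = a*x + y in double precision: 2 flops, 3 x 8 bytes
    ai = arithmetic_intensity(flops, bytes_moved)
    print(f"intensity {ai:.3f} flop/byte, ridge point {ridge_point(m):.1f} flop/byte")
    print(f"attainable {attainable_gflops(m, ai):.2f} GFLOP/s of {m.peak_gflops:.0f} peak")
    move = bytes_moved * m.pj_per_byte * 1e-12
    print(f"data-movement share of energy: {move / energy_joules(m, flops, bytes_moved):.1%}")
```

For this stream-like update the intensity (about 0.08 flop/byte) lies far below the ridge point, so the kernel is bandwidth-bound and, under the assumed costs, roughly 99% of its energy goes into moving data rather than computing.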

For the Spherical Harmonics dwarf, for example, the project team investigated different memory management approaches using the Intel compiler suite on the Xeon Broadwell and Xeon Phi architectures. The impact of clock boosting and vectorisation was also considered. This dwarf is particularly relevant because the IFS relies on a spectral discretisation in space, which requires costly spectral transforms between spectral and grid-point space at every time step. In regional, limited-area model configurations of the IFS, spectral transforms consist of Fourier methods in two spatial dimensions (BiFourier Transform dwarf); in the global model, Fourier and Legendre transforms are combined (Spherical Harmonics dwarf).
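The grid-point to spectral round trip behind the BiFourier approach can be sketched with a two-dimensional discrete Fourier transform. For clarity this uses a naive DFT from the Python standard library and assumes a doubly periodic field; operational codes use fast Fourier transforms, and the global Spherical Harmonics case replaces one Fourier direction with a Legendre transform, which is not shown. The function names are our own.

```python
import cmath
import math
from typing import List


def dft2(field: List[List[float]]) -> List[List[complex]]:
    """Forward 2-D DFT (grid-point -> spectral space); naive O((nx*ny)^2) for illustration."""
    ny, nx = len(field), len(field[0])
    spec = [[0j] * nx for _ in range(ny)]
    for k in range(ny):
        for l in range(nx):
            s = 0j
            for j in range(ny):
                for i in range(nx):
                    s += field[j][i] * cmath.exp(-2j * math.pi * (k * j / ny + l * i / nx))
            spec[k][l] = s
    return spec


def idft2(spec: List[List[complex]]) -> List[List[float]]:
    """Inverse 2-D DFT (spectral -> grid-point space), keeping the real part."""
    ny, nx = len(spec), len(spec[0])
    field = [[0.0] * nx for _ in range(ny)]
    for j in range(ny):
        for i in range(nx):
            s = 0j
            for k in range(ny):
                for l in range(nx):
                    s += spec[k][l] * cmath.exp(2j * math.pi * (k * j / ny + l * i / nx))
            field[j][i] = (s / (ny * nx)).real
    return field


if __name__ == "__main__":
    nx, ny = 8, 6
    # Doubly periodic test field: one wave in x plus two waves in y
    field = [[math.cos(2 * math.pi * i / nx) + 0.5 * math.sin(4 * math.pi * j / ny)
              for i in range(nx)] for j in range(ny)]
    back = idft2(dft2(field))
    err = max(abs(b - f) for rb, rf in zip(back, field) for b, f in zip(rb, rf))
    print(f"round-trip error: {err:.2e}")
```

A spectral model performs a forward and an inverse transform like this at every time step, which is why the cost of these transforms matters so much for overall performance.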

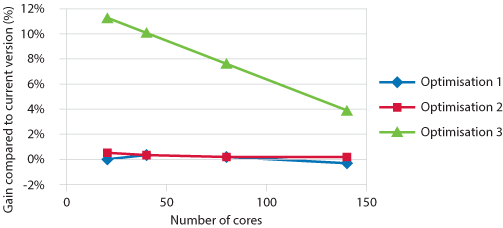

Other code modifications focused on vectorisation, which aims to maximise the number of data elements to which the same operation is applied simultaneously. The performance impact of these optimisations is shown in the chart. While some of the initial transformations did not lead to a significant performance improvement, optimisation no. 3 led to a performance boost of between 4% and close to 12% on a single core. This option combined vectorisation and memory layout optimisations.

First optimisation results on Xeon for the Spectral Transform–Spherical Harmonics dwarf: Optimisations 1 and 2 represent vectorisation improvements and optimisation 3 data layout optimisation (data courtesy of P. Messmer, NVIDIA).

For the BiFourier Transform dwarf, the CPU investigations also focused on vectorisation, and a 17.6% speed-up was achieved on an Intel Xeon Haswell-based node.

On the GPU architecture, investigations targeted an OpenACC implementation of the Spherical Harmonics dwarf. Profiling of the original implementation, which initially focused on the Fourier transform part of the algorithm, revealed two areas for optimisation: minimising data layout transformations, especially transpositions, and increasing the amount of work performed in each Fourier transform call. By changing the data layout and grouping the fast Fourier transforms (FFTs) into batches of the same size, a speed-up of close to a factor of two was achieved.

GPU optimisation of the Spherical Harmonics dwarf with double-precision floating point (FP64) format (data courtesy of P. Messmer, NVIDIA).
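The batching step described for the GPU work can be sketched independently of any FFT library. We assume, for illustration, an octahedral reduced Gaussian grid, on which the number of points per latitude ring varies (20 on the ring nearest each pole, four more on each ring towards the equator), so the Fourier transforms along the rings have different lengths. Collecting every transform of the same length, across rings and fields, into one contiguous buffer lets a batched FFT interface execute the group as a single call; cuFFT's `cufftPlanMany` is one such interface. The function names are hypothetical and this is not the ESCAPE implementation.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Member = Tuple[int, int]  # (field index, ring index)


def octahedral_ring_lengths(n_lat_per_hemisphere: int) -> List[int]:
    """Points per latitude ring, north pole to south pole (assumed octahedral grid)."""
    north = [4 * i + 16 for i in range(1, n_lat_per_hemisphere + 1)]
    return north + north[::-1]  # southern hemisphere mirrors the northern one


def batch_by_length(ring_lengths: List[int], n_fields: int) -> Dict[int, List[Member]]:
    """Group every (field, ring) transform by FFT length: one batched call per length."""
    batches: Dict[int, List[Member]] = defaultdict(list)
    for f in range(n_fields):
        for r, n in enumerate(ring_lengths):
            batches[n].append((f, r))
    return dict(batches)


def pack_batch(fields: List[List[List[float]]], members: List[Member]) -> List[float]:
    """Copy the rings of one batch into a single contiguous buffer, ring after ring."""
    buf: List[float] = []
    for f, r in members:
        buf.extend(fields[f][r])
    return buf


if __name__ == "__main__":
    lengths = octahedral_ring_lengths(4)
    n_fields = 3
    batches = batch_by_length(lengths, n_fields)
    print(f"{n_fields * len(lengths)} individual FFTs -> {len(batches)} batched calls")
    for n, members in sorted(batches.items()):
        print(f"length {n}: batch of {len(members)} transforms")
```

Packing each batch contiguously also addresses the first optimisation target: the data are laid out once in the order the batched transform expects, instead of being transposed before every call.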

To feed the dwarf experience back into complete models, limited-area prediction models have been installed at ECMWF; they will serve as reference standards for performance evaluations once selected dwarfs are reintegrated into the models. These reference installations also use the Atlas library. This capability will eventually make it possible to gauge the impact of running optimised dwarfs on novel hardware in full-sized forecast systems.

Experience and results from the first half of the project have been shared through workshops and training. In August 2017, the ESCAPE Young Scientist Summer School in Copenhagen, Denmark, was attended by 28 students from 12 countries. Lecture materials are available on the ESCAPE website. ESCAPE also held its second dissemination workshop in September 2017 in Poznan, Poland, attended by some 40 researchers from Europe and the United States. Close collaborations have been established with two centres of excellence (ESiWACE and POP) and the EuroEXA FET project.

Looking ahead

The design and implementation of the dwarfs through a common, well-defined library is a major step towards re-structuring existing computational kernels for weather forecasting. The mid-term review report describes the dwarf kernels as building blocks for exascale-ready solutions, which will impact the whole weather forecasting community.

Since the review, the work of the ESCAPE consortium has focused on the upcoming release of the second batch of dwarfs, including two MPDATA dwarfs that perform advection calculations for the future finite-volume model option of the IFS, an elliptic solver and a radiation dwarf. In addition, selected dwarfs have been ported to different architectures (GPU and Intel Xeon Phi Knights Landing, KNL).

The Atlas library will be released to the public as open source early in 2018. In total, 12 deliverables will have been produced by the end of 2017, of which seven will be publicly accessible.

ESCAPE partners

- ECMWF (Coordinator), International

- Météo-France, France

- Institut Royal Météorologique de Belgique, Belgium

- Danmarks Meteorologiske Institut, Denmark

- Federal Office of Meteorology and Climatology, Switzerland

- Deutscher Wetterdienst, Germany

- Loughborough University, United Kingdom

- Irish Centre for High-End Computing, Ireland

- Poznan Supercomputing and Networking Centre, Poland

- Atos/Bull, France

- NVIDIA, Switzerland

- Optalysys, United Kingdom


ESCAPE is funded by the European Commission under the Future and Emerging Technologies - High-Performance Computing call for research and innovation actions, grant agreement 671627.
