ECMWF is hosting a meeting of Cray supercomputer users in London from 8 to 12 May. The head of supercomputing at ECMWF, Mike Hawkins, has a special interest in the event.

The 2016 Cray User Group conference is an opportunity for users to exchange ideas with Cray and with one another.

In addition to weather and climate centres, customers of the US supercomputer manufacturer include a wide range of businesses and research centres.

“This is an excellent opportunity for us to meet a lot of different people and to share our experience with them,” says Mike, the Head of the High-Performance Computing and Storage Section at ECMWF.

The meeting, which brings together more than 200 Cray users and representatives, comes at a time when the ever-growing demand for computing power can no longer be met by ever faster processors.

Supercomputer manufacturers and users are therefore investigating alternative solutions.

Growing demand

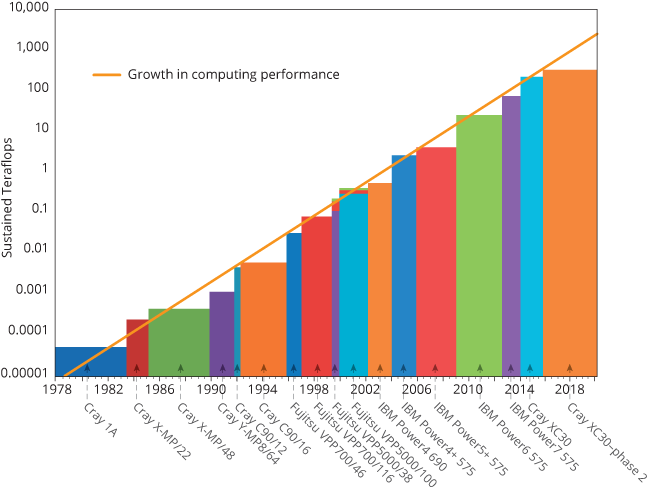

Supercomputers have been around since the sixties, but the computing power of early models was tiny compared to today’s high-performance computing (HPC) facilities.

The Cray-1A, on which ECMWF’s first operational forecast was produced, had a peak performance of only around a tenth of the computing power of a modern smartphone.

“The peak performance of the Cray XC30 system used by the Centre today is 21 million times greater, equivalent to a stack of smartphones more than 15 km tall,” Mike points out.

This is the kind of performance needed to run ECMWF’s highly successful global numerical weather prediction model.

ECMWF’s current Cray XC30 system is being upgraded to an XC40 system.

Computing power at the Centre will have to grow further to meet the demand for more accurate and reliable weather forecasts, especially of severe events.

The reason is that better forecasts require finer model grid resolutions, more realistic representations of relevant Earth system processes, and the assimilation of more observations.

But the growth in computing performance is coming up against some obstacles.

Scalability

“Computer processors stopped getting faster in 2004,” says Mike, who has been working in high-performance computing for the best part of three decades.

After completing a degree in ‘Computer and Microprocessor Systems’ at the University of Essex back in 1987, he worked in scientific computing at the Atomic Weapons Establishment in Aldermaston, UK, for 23 years before joining ECMWF in 2010.

Mike points out that today the main way to increase the performance of supercomputers is by carrying out more operations in parallel.

This means traditional computer code has to be adapted – made more ‘scalable’ – so that it can run on novel, massively parallel hardware architectures.

ESCAPE is an EU-funded scalability project coordinated by ECMWF. It brings together 12 partners from eight countries and aims to develop world-class, extreme-scale computing capabilities for European operational numerical weather prediction and future climate models. In addition to ESCAPE, ECMWF is involved in a number of other scalability-related projects, such as ESiWACE and NEXTGenIO.

Mike gives the analogy of a man building a house brick by brick. To speed up the process, he can lay the bricks faster and faster, but this works only up to a point.

Putting four people on the job, one for each wall, will speed things up more, but then the corners will have to be coordinated. Four people is a relatively small level of parallelism, so coordinating their work is not too onerous.

But what happens if you give 100 people a pile of bricks each to build a house? That’s a much greater level of parallelism, and it requires a lot more time for coordination tasks.

“That’s where we are today on scalability. Where we want to be in the future is probably to have 100,000 people build the house, though by then the house is probably a skyscraper and we need to stop using bricks,” he says.

More communication between different parts of the system also means that more data has to be moved around, which consumes energy and can create input/output bottlenecks.

“The system as a whole is getting more and more complicated – the easy ride has stopped,” Mike warns.

Since all supercomputer users face similar issues, this year’s Cray User Group meeting is devoted to the theme of scalability.

Storage

Greater computing power goes hand in hand with the production of more data.

“The amount of data we put into our archive goes up roughly in proportion to the size of the HPC system,” Mike observes.

This can pose problems of its own.

The data is stored on tape, which Mike describes as “a wonderful product as it provides for dense storage and needs no electricity when not being used”.

But growth in tape capacity has slowed. This means a rapidly increasing amount of tape is required to hold ECMWF’s data, which grows by more than 50% every year.

By the end of 2015, the archive held about 140 petabytes of data, and about 140 terabytes were being added every day. “In March 2016 alone we added more data to the archive than was in the archive in November 2006,” Mike points out.

At about 3.5 million pounds a year, spending on the data handling system and data storage currently accounts for more than a fifth of ECMWF’s combined budget for storage and high-performance computing.

Renewal

On the plus side, tape has a much longer renewal cycle than the high-performance computing facility, which is replaced about every five years, normally with an upgrade in between.

The Centre’s current supercomputer became operational in September 2014 and is now being upgraded from Cray XC30 to Cray XC40 systems.

Sustained HPC performance at ECMWF has increased exponentially since 1978.

“A full replacement is scheduled for 2020 and planning for that will have to start as early as next year,” Mike observes.

The Centre’s scientists will have to work out what they need, funding will have to be secured, invitations to tender will have to go out, and bids will have to be assessed.

Even after the new machine is installed, six to nine months of parallel running will be required to make sure forecasts can be produced on the new system.

“In the meantime, we are keeping in touch with vendors,” says Mike. “A big plus this time is our Scalability Programme, which will put a lot of upfront intelligence into how we look at the new system.”

Top photo: Mike Hawkins in front of ECMWF's Cray Sonexion storage system.