How fast can weather prediction models be adapted to work on tomorrow’s supercomputer architectures?

This is one of the questions ECMWF scientist Nils Wedi will address in his talk at a supercomputer workshop which opened at the Centre on 24 October.

The five-day Workshop on High-Performance Computing in Meteorology comes at a time when ECMWF has ambitious plans to extend the skilful range of its forecasts by increasing the model’s spatial and temporal resolution while adding more realism to it. This will require much greater computing power than is currently available to the Centre.

As a meteorologist who specialises in numerical methods, Dr Wedi is well aware of the size of the challenge.

“We can’t just make our models ever more complex without taking into account the computational cost and the associated supercomputer energy consumption,” he warns.

“Two things need to come together,” he explains. “On the one hand, we are looking for supercomputer architectures which can handle tomorrow’s weather prediction models. On the other, we also need to adapt our models so that they run efficiently on those architectures.”

Dr Wedi’s interest in meteorology goes back to his teens, when he trained to become a glider pilot.

He joined ECMWF in 1995 after completing a master’s degree in meteorology. In 2005, the Ludwig Maximilian University of Munich awarded him a PhD for his work on ‘time-dependent boundaries in numerical models’.

Having been in charge of numerical aspects in ECMWF’s Research Department, he was recently appointed Head of the Earth System Modelling Section, where he works with a team of experts on the challenges ahead.

Growing complexity

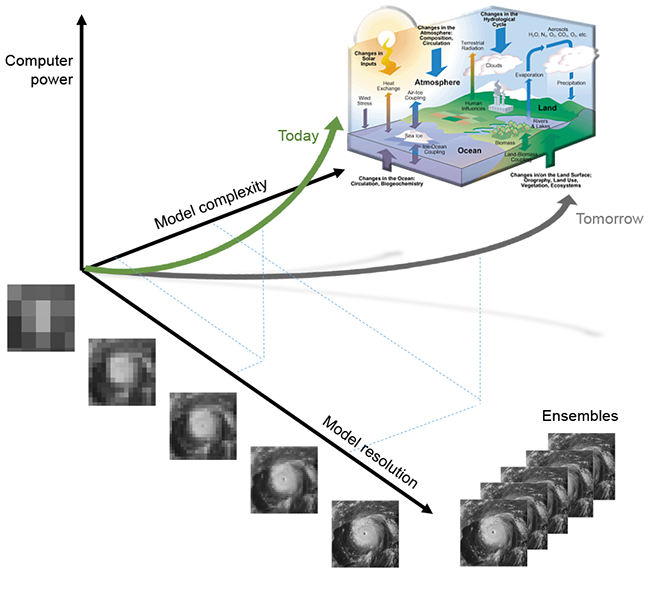

One of the sources of growing complexity in numerical weather prediction (NWP) is the inclusion of more Earth system components in NWP models to increase the accuracy of forecasts.

Efforts are under way to limit the need for greater computer power as model complexity and model resolution grow.

ECMWF’s Integrated Forecasting System (IFS) already takes into account interactions between the atmosphere, the oceans, ocean waves, sea ice, the land surface and atmospheric composition in the global weather forecasts it produces.

However, the plan is to make these interactions much more dynamic, with rapid exchanges of information between the different Earth system components.

“The more processes you take into account, the more information needs to be exchanged between computer nodes in a very short period of time,” Dr Wedi observes.

Higher resolution

The need to increase the spatial resolution of Earth system models poses another computational challenge.

Currently, ECMWF operates the highest-resolution global data assimilation and weather forecast model in the world, with a quasi-uniform distribution of 9 km-wide grid boxes.

It also has the capability to conduct research at a grid spacing as small as 1.3 km, corresponding to 256.8 million grid points per vertical level.

Experiments at this kind of resolution can give vital insights into how small-scale effects, typically modelled in subgrid-scale parametrizations, influence large-scale global predictions of weather and climate.

They have become possible as a result of a recent supercomputer upgrade from two Cray XC30 to two Cray XC40 systems, each with more than 130,000 cores of the latest Intel Xeon ‘Broadwell’ processor.

ECMWF’s two Cray XC30 supercomputers were upgraded to Cray XC40s earlier this year.

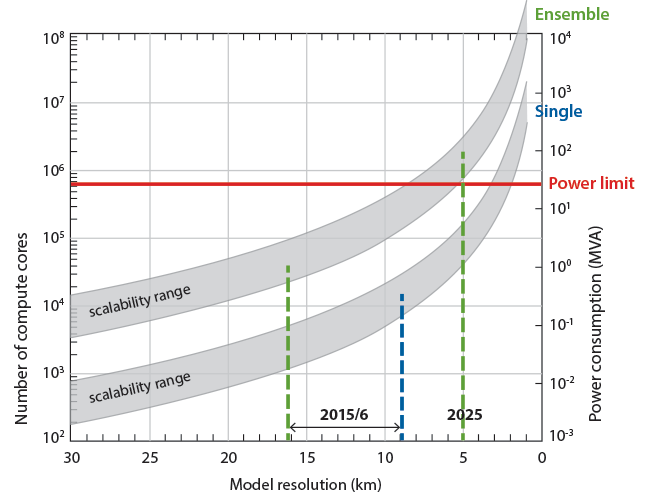

“By 2025 we want to be able to run an ensemble of analyses and forecasts at a grid spacing of 5 km, requiring predictions and data processing for billions of grid boxes in less than a second,” Dr Wedi points out.

“This is necessary for the forecasts still to be produced in the same amount of time as now.”
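To give a rough sense of why finer grid spacing is so demanding, the back-of-the-envelope sketch below estimates how the work for a single forecast grows when the grid spacing shrinks. It assumes that the number of horizontal grid points scales with the inverse square of the spacing and that the time step shrinks in proportion to the spacing. Both are simplifying assumptions for illustration, not ECMWF figures, and the function name `relative_cost` is hypothetical.

```python
def relative_cost(old_spacing_km: float, new_spacing_km: float) -> float:
    """Estimate how much more work a forecast needs at a finer grid spacing.

    Assumptions (illustrative only): horizontal grid points scale as 1/dx^2,
    and the time step scales with dx, so the number of steps scales as 1/dx.
    The number of vertical levels and the cost per grid point are held fixed.
    """
    ratio = old_spacing_km / new_spacing_km
    horizontal_points = ratio ** 2  # more grid columns to cover the globe
    time_steps = ratio              # shorter time step, so more steps
    return horizontal_points * time_steps


# Going from a 9 km grid to a 5 km grid under these assumptions:
print(f"{relative_cost(9.0, 5.0):.1f}x")  # prints "5.8x"
```

Under these assumptions, moving from 9 km to 5 km requires nearly six times the work per forecast, before any added model complexity or extra ensemble members are counted.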

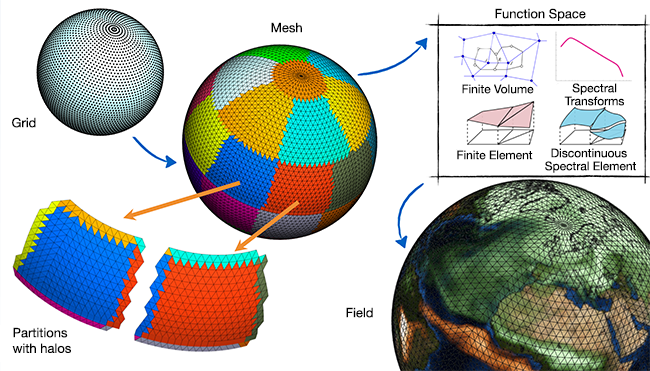

Finding the numerical methods which are best adapted to the task is an active area of research.

“The spectral transform method we currently use requires a lot of data communication. This may make it very costly to build a computer with sufficient cable connections between the required nodes,” Dr Wedi says.

“We are therefore investigating alternatives, such as finite volume methods and other discretisations as well as hybrid approaches.”

One of the ways to keep computational cost down is to find clever ways to arrange the model’s grid points. The grid used at ECMWF is shown here for illustrative purposes at a much lower resolution than the operational one. It is designed to work well with numerical methods which may be used in the IFS in the future. The mesh constructed from the grid is partitioned into areas between which information can be exchanged efficiently via ‘halos’. Mesh information is combined in alternative ‘function spaces’ to evolve the physical fields in time.
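The idea of exchanging information via halos can be illustrated with a toy example. The sketch below is not ECMWF code: it assumes a one-dimensional periodic field split into equal partitions, each padded with one halo cell per side, and all function names are hypothetical. After the halo exchange, each partition can apply a three-point average using only data it holds locally, and the result matches the same calculation on the undivided field.

```python
def split_with_halos(field, parts):
    """Split a periodic 1D field into equal partitions, each with one
    empty halo cell per side. Assumes len(field) is divisible by parts."""
    size = len(field) // parts
    return [[None] + field[p * size:(p + 1) * size] + [None]
            for p in range(parts)]


def exchange_halos(partitions):
    """Fill each partition's halo cells with the edge values owned by
    its left and right neighbours (periodic wrap-around)."""
    n = len(partitions)
    for p, part in enumerate(partitions):
        left, right = partitions[(p - 1) % n], partitions[(p + 1) % n]
        part[0] = left[-2]   # last owned value of the left neighbour
        part[-1] = right[1]  # first owned value of the right neighbour


def smooth_local(part):
    """Three-point average over owned cells, using only local data."""
    return [(part[i - 1] + part[i] + part[i + 1]) / 3
            for i in range(1, len(part) - 1)]


field = [float(i * i) for i in range(12)]
partitions = split_with_halos(field, parts=3)
exchange_halos(partitions)
result = [v for part in partitions for v in smooth_local(part)]

# Reference: the same stencil applied to the undivided periodic field.
n = len(field)
expected = [(field[i - 1] + field[i] + field[(i + 1) % n]) / 3
            for i in range(n)]
assert result == expected
```

In a real parallel model each partition would live on a different compute node and the exchange would be a network message, which is why the amount of halo data matters for cost.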

New architectures

Computer processors stopped getting faster more than ten years ago. Since then, computing performance has increased mainly as a result of using more processors arranged in units that require much less energy per processor.

Future supercomputing architectures providing exascale capabilities will thus rely on massively parallel computing with a hierarchical arrangement of compute capability.

“All aspects of the forecasting system will have to be adapted so that it can run efficiently on such architectures,” Dr Wedi says.

The issue is being addressed by ECMWF’s Scalability Programme. Two of the projects feeding into it are the EU-funded ESCAPE project (Energy-efficient Scalable Algorithms for Weather Prediction at Exascale) and the ERC-funded PantaRhei project hosted at ECMWF.

The chart indicates that ECMWF’s ability to continue to increase the resolution of its forecasts, while keeping power consumption within sustainable limits, depends very much on the success of the Scalability Programme. The number of required compute cores, and hence the amount of power consumed, rises rapidly as the resolution of single ‘deterministic’ forecasts on the one hand and ensemble forecasts on the other increases.

“ESCAPE aims to break up NWP code into smaller parts, optimise them for energy efficiency, use them for architectural co-design, and ultimately reassemble them into the global model,” Dr Wedi explains.

An ESCAPE dissemination workshop took place at the Danish Meteorological Institute (DMI) from 18 to 20 October. It brought together ESCAPE partners and the leading NWP consortia in Europe to exchange views on the best way forward to address the challenges for weather forecasting.

The PantaRhei project is exploring new mathematical tools combining the strengths of well-established methods in numerical weather prediction with methods originating from other computational fluid dynamics disciplines.

“Ultimately the Scalability Programme is about our ability to keep improving our weather forecasts while using computers in an environmentally sustainable manner,” says Dr Wedi.

“Both these goals are part of our new ten-year Strategy and we must work on them in tandem.”