The next generation of numerical weather prediction (NWP) systems will be based on highly parallel and flexible data structures. Willem Deconinck is at the forefront of developing the necessary numerical techniques and software tools.

His background reflects the many different skillsets that need to come together for successful NWP.

During his master’s degrees in mechanical engineering and aerospace engineering, he specialised in turbulence modelling and fluid dynamics, which underlies the dynamical core of NWP models.

As part of subsequent doctoral research, he helped to develop new high-order spectral element fluid dynamics code for high-performance computing (HPC).

“It is during this research project that I acquired the coding and HPC skills that are also crucial in NWP,” Willem says.

In numerical weather prediction, the methods used to solve the equations that describe atmospheric flow are translated into code that can be read by supercomputers. (Photo: A. Brookes/ECMWF Copernicus)

In 2012, when he started to work on numerical aspects in ECMWF’s Research Department, the PantaRhei project was getting under way to develop an alternative dynamical core option particularly suitable for highly parallel computing.

Existing software tools were not able to adequately support this work. Willem had to develop something new: Atlas.

“It was evident that Atlas could be of benefit to the wider community,” Willem says. “Today it is a software library with a wide range of applications in the development of Earth system model components and data processing on a sphere.”

After years of development, Atlas has now been publicly released.

New numerical module

The first use of Atlas was in the development of an alternative scalable dynamical core module for ECMWF’s Integrated Forecasting System (IFS).

The idea is for this finite volume module (FVM) to be computationally efficient even at very high spatial resolutions. To achieve this, it has to work well on emerging energy-efficient and heterogeneous HPC hardware.

The FVM uses the finite-volume method to approximately integrate the partial differential equations that describe global atmospheric flow.
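As a rough illustration of the finite-volume idea, and not the FVM's actual scheme, the sketch below advances cell averages on a one-dimensional periodic mesh using first-order upwind fluxes. The function name `upwind_step`, the 1D setting and the constant positive velocity are all assumptions made for this example. Because every face flux leaves one cell and enters its neighbour, the total amount of the advected quantity is conserved.

```python
def upwind_step(cell_means, velocity, dx, dt):
    """Advance cell averages by one step of first-order upwind advection.

    Illustrative only: a 1D periodic mesh with constant velocity > 0.
    """
    n = len(cell_means)
    # flux[i] is the flux through the face between cell i-1 and cell i,
    # taken from the upwind (left) cell; index -1 wraps around periodically.
    flux = [velocity * cell_means[i - 1] for i in range(n)]
    # Each cell changes by the difference between its inflow and outflow.
    return [
        cell_means[i] - dt / dx * (flux[(i + 1) % n] - flux[i])
        for i in range(n)
    ]


# A square pulse advected for 20 steps at a Courant number of 0.5.
initial = [1.0 if 4 <= i < 8 else 0.0 for i in range(32)]
state = initial
for _ in range(20):
    state = upwind_step(state, velocity=1.0, dx=1.0, dt=0.5)

total_before = sum(initial)
total_after = sum(state)
```

Comparing `total_before` with `total_after` shows the conservation property that makes flux-form schemes attractive for atmospheric transport.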

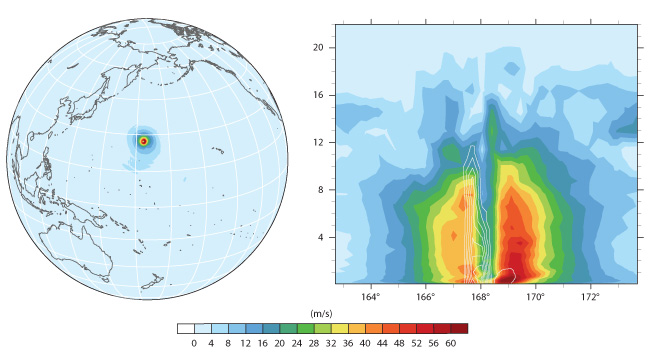

An idealised tropical cyclone simulation after 10 days using the FVM. The globe image shows the near-surface wind magnitude surrounding the cyclone, and the chart provides a view of a vertical section across the cyclone showing the wind magnitude (shading) and rain-water mixing ratio (contours).

“I was part of the team that developed the FVM. My task was to provide the initial parallel code and the kind of flexible parallel distributed data structures and mesh generation tools needed for the module,” Willem says.

Developing Atlas

One of the advantages of the finite-volume method is that it can easily be formulated for unstructured meshes on a sphere.

“This is where Atlas comes in,” Willem says. “The Atlas framework provides parallel, flexible, object-oriented data structures for both structured and unstructured meshes.”

For the purposes of the FVM, Atlas divides the globe into many subdomains. Computing tasks are distributed among these subdomains for parallel processing.

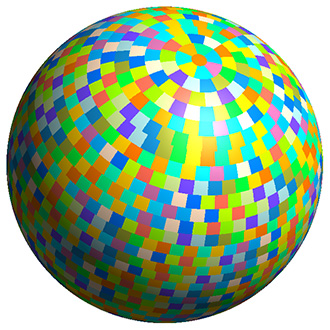

Atlas divides the globe into equally sized subdomains. In this example, there are 1,600 such subdomains based on the N1280 octahedral reduced Gaussian grid.
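To give a feel for the numbers involved, the sketch below counts the points of an N1280 octahedral grid and splits them into 1,600 parts whose sizes differ by at most one point. It assumes the usual octahedral rule of 4i + 16 points on the i-th latitude counted from the pole. The helper names are hypothetical, and the simple index-based split is a stand-in, not the partitioning algorithm Atlas uses.

```python
def octahedral_points_per_latitude(n):
    """Points on each latitude row, pole to pole, for an octahedral N<n> grid.

    Assumes 4*i + 16 points on the i-th row counted from the pole.
    """
    northern = [4 * i + 16 for i in range(1, n + 1)]
    return northern + northern[::-1]


def split_evenly(total_points, num_parts):
    """Return part sizes that differ by at most one point."""
    base, extra = divmod(total_points, num_parts)
    return [base + 1 if p < extra else base for p in range(num_parts)]


rows = octahedral_points_per_latitude(1280)
total = sum(rows)
sizes = split_evenly(total, 1600)
```

Under these assumptions each subdomain ends up with a little over 4,000 grid points, which is the unit of work handed to one parallel task.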

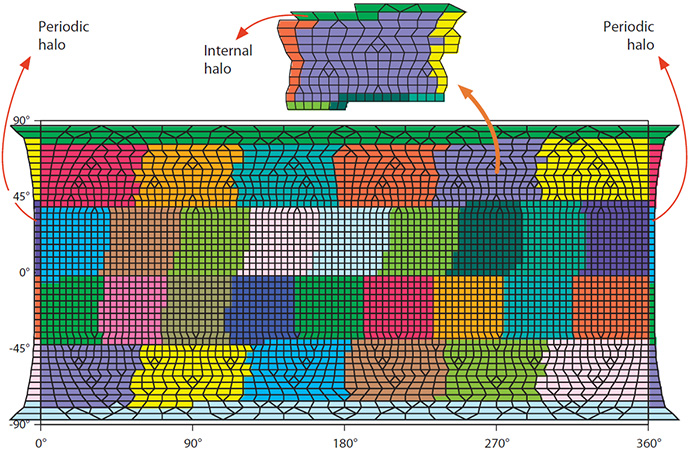

Atlas also provides efficient communication patterns between the subdomains. It does this by defining ‘halos’ around them.

“The FVM requires only nearest-neighbour communications for halos of adjacent subdomains, so the halo thickness is limited to one mesh element,” Willem explains.

“Data communication via halos is one of the strengths of the FVM: it does not require the kind of global communication that is needed in the spectral transform method currently used in the IFS.”

Computational subdomains are surrounded by ‘halos’ to enable the required communication between nearest-neighbour elements of the primary mesh.
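The halo idea can be sketched in a few lines. The example below is a toy model and does not use the Atlas API: a periodic 1D field is split into subdomains, each padded with a one-cell halo, and a nearest-neighbour stencil is applied locally. After each step, only the halos are refreshed from the two adjacent subdomains. All function names are hypothetical.

```python
def split_with_halos(field, num_parts):
    """Split a periodic 1D field into chunks padded with one-cell halos."""
    n = len(field)
    if n % num_parts != 0:
        raise ValueError("field length must be divisible by num_parts")
    size = n // num_parts
    parts = []
    for p in range(num_parts):
        start = p * size
        left = field[(start - 1) % n]       # halo copied from left neighbour
        right = field[(start + size) % n]   # halo copied from right neighbour
        parts.append([left] + field[start:start + size] + [right])
    return parts


def exchange_halos(parts):
    """Refresh each halo from the owned edge cell of the adjacent subdomain."""
    num = len(parts)
    for p in range(num):
        parts[p][0] = parts[(p - 1) % num][-2]
        parts[p][-1] = parts[(p + 1) % num][1]


def step(parts):
    """Apply a three-point average to owned cells, then exchange halos."""
    for part in parts:
        part[1:-1] = [
            (part[i - 1] + part[i] + part[i + 1]) / 3.0
            for i in range(1, len(part) - 1)
        ]
    exchange_halos(parts)


def smooth_global(field):
    """Reference: the same stencil applied to the undivided field."""
    n = len(field)
    return [
        (field[(i - 1) % n] + field[i] + field[(i + 1) % n]) / 3.0
        for i in range(n)
    ]


field = [float((7 * i) % 13) for i in range(24)]
parts = split_with_halos(field, num_parts=4)
reference = field
for _ in range(3):
    step(parts)
    reference = smooth_global(reference)

# Gather the owned cells (halos excluded) back into one field.
gathered = [value for part in parts for value in part[1:-1]]
```

The gathered result matches the undivided computation even though no subdomain ever reads data beyond its immediate neighbours, which is the property the quote above describes.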

New interpolation package

It soon became clear that Atlas would have wider applications than just the FVM.

When the ageing EMOSLIB interpolation software had to be replaced, it turned out that Atlas could help in the development of a new solution.

The new MIR (Meteorological Interpolation and Regridding) package supports unstructured grids. “Like the FVM, it needed the kind of data infrastructure provided by Atlas,” Willem says.

“I closely collaborated with the developers of MIR so that they could use Atlas to implement MIR data structures and numerical techniques.”

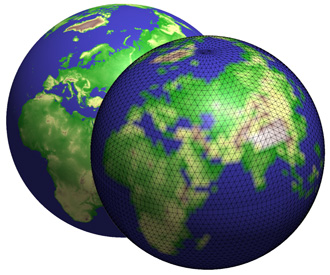

Surface geopotential height field (in m² s⁻²) interpolated from an octahedral reduced Gaussian grid TCo1279 (high-resolution operational grid, back) to low resolution TCo31 (front) using MIR. The colour palette is qualitative, for illustrative purposes only.

Scalability Programme

Atlas is also proving useful to the collaborative EU-funded ESCAPE project, which aims to prepare NWP for the exascale era of supercomputing.

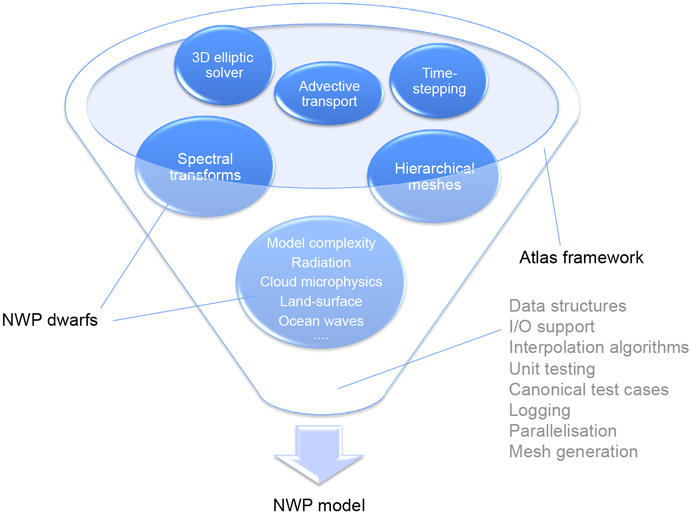

One of the aims of ESCAPE is to break down NWP code into ‘weather and climate dwarfs’ which can be optimised for different hardware configurations before being reintegrated into NWP models.

Many of the dwarfs are being developed using Atlas’s object-oriented data structures.

“Reintegrating the dwarfs into NWP models is easier if Atlas is used as a common foundation,” Willem says.

Atlas provides common data structures for the weather and climate dwarfs developed as part of the ESCAPE project.

Accelerators such as graphics processing units (GPUs) are expected to become increasingly important in future hardware configurations. Atlas makes it possible to seamlessly copy fields from a CPU to a GPU and back.

Willem brings some of this work together in the PolyMitos project. For example, he is reintegrating some of the dwarfs developed in ESCAPE into the IFS.

The FVM, MIR, ESCAPE and PolyMitos are all related to ECMWF’s Scalability Programme: they aim to modernise NWP processes so that they will run as efficiently as possible on future HPC architectures.

“Atlas helps us to have the flexibility we need for the success of the Scalability Programme,” Willem says.